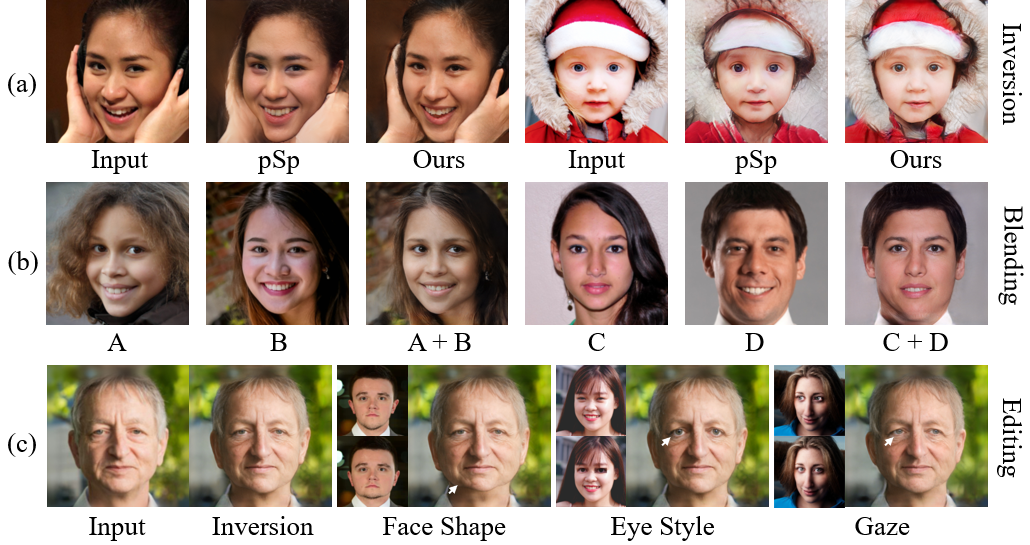

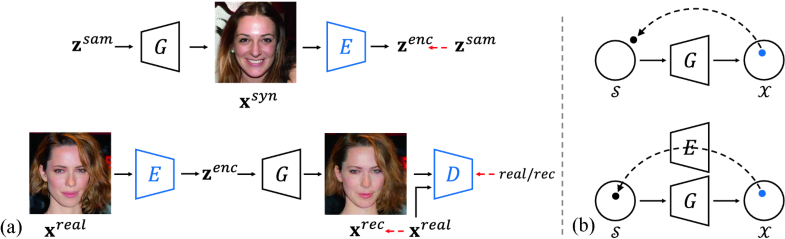

Our method allows (a) high-fidelity GAN inversion with better spatial details, and enables two novel applications: (b) face blending and (c) customized manipulation with one image pair.
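For readers new to GAN inversion, here is the generic optimization-based formulation: search for a latent code `z` whose generated output `G(z)` reconstructs a given image `x`. This is a toy baseline under stated assumptions, not the method shown on this page. The "generator" is a hypothetical random linear map, whereas real GANs are deep non-linear networks.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy "generator": a fixed linear map from a 4-D latent code
# to a 16-D "image". Real GAN generators are deep non-linear networks.
W = rng.standard_normal((16, 4))


def generate(z):
    return W @ z


def invert(x, steps=2000):
    """Find z minimizing ||G(z) - x||^2 by plain gradient descent."""
    # Step size chosen from the largest singular value so descent is stable.
    lr = 0.5 / np.linalg.norm(W, 2) ** 2
    z = np.zeros(W.shape[1])
    for _ in range(steps):
        residual = generate(z) - x
        grad = 2.0 * W.T @ residual  # gradient of the squared reconstruction error
        z -= lr * grad
    return z


z_true = rng.standard_normal(4)
x = generate(z_true)
z_hat = invert(x)
print(np.linalg.norm(generate(z_hat) - x))  # close to zero
```

Because this toy generator is linear and full-rank, the optimum recovers `z_true`. With a real generator the objective is non-convex and reconstructions typically lose detail, which is the kind of fidelity gap the results above target.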

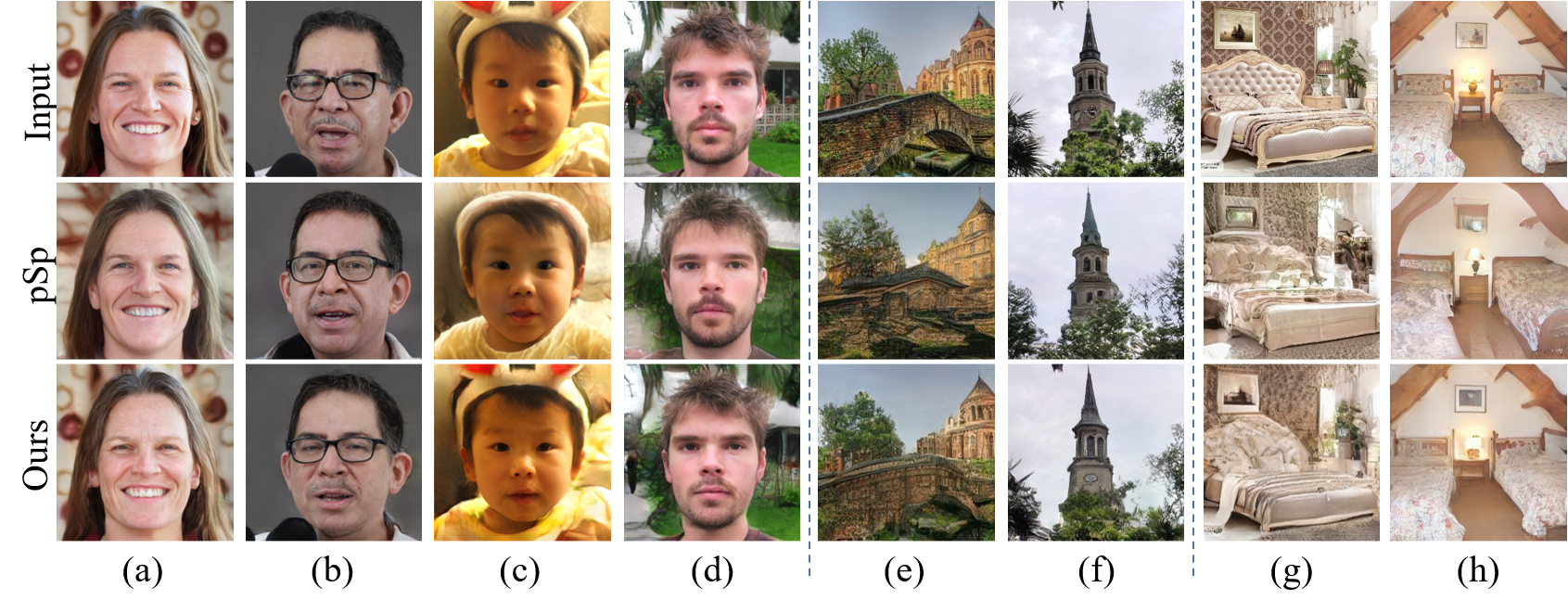

More inversion results on human faces, outdoor churches, and indoor bedrooms.

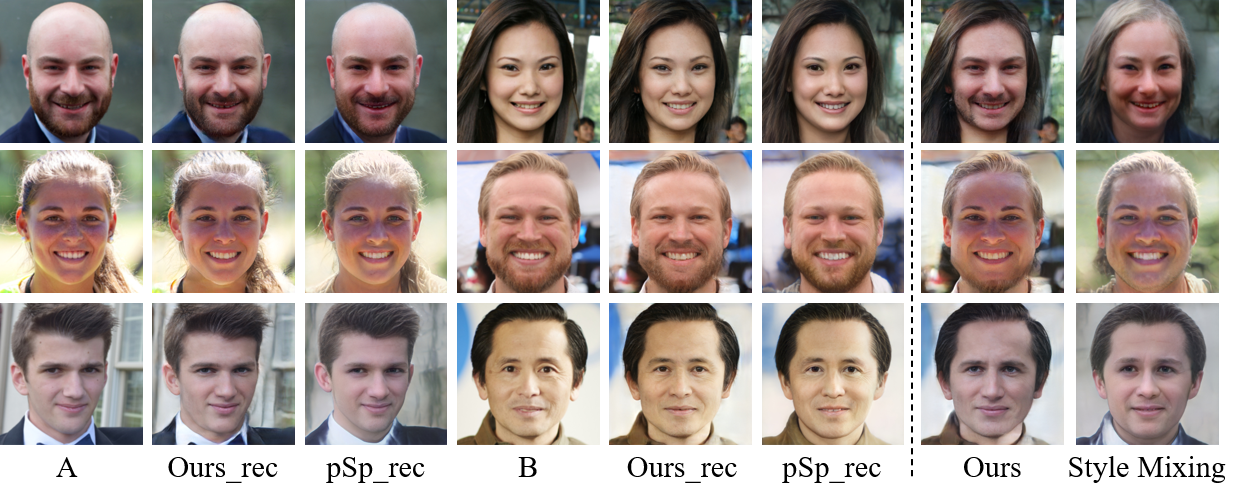

More face blending results, borrowing the face contour from one image and the facial details from another.

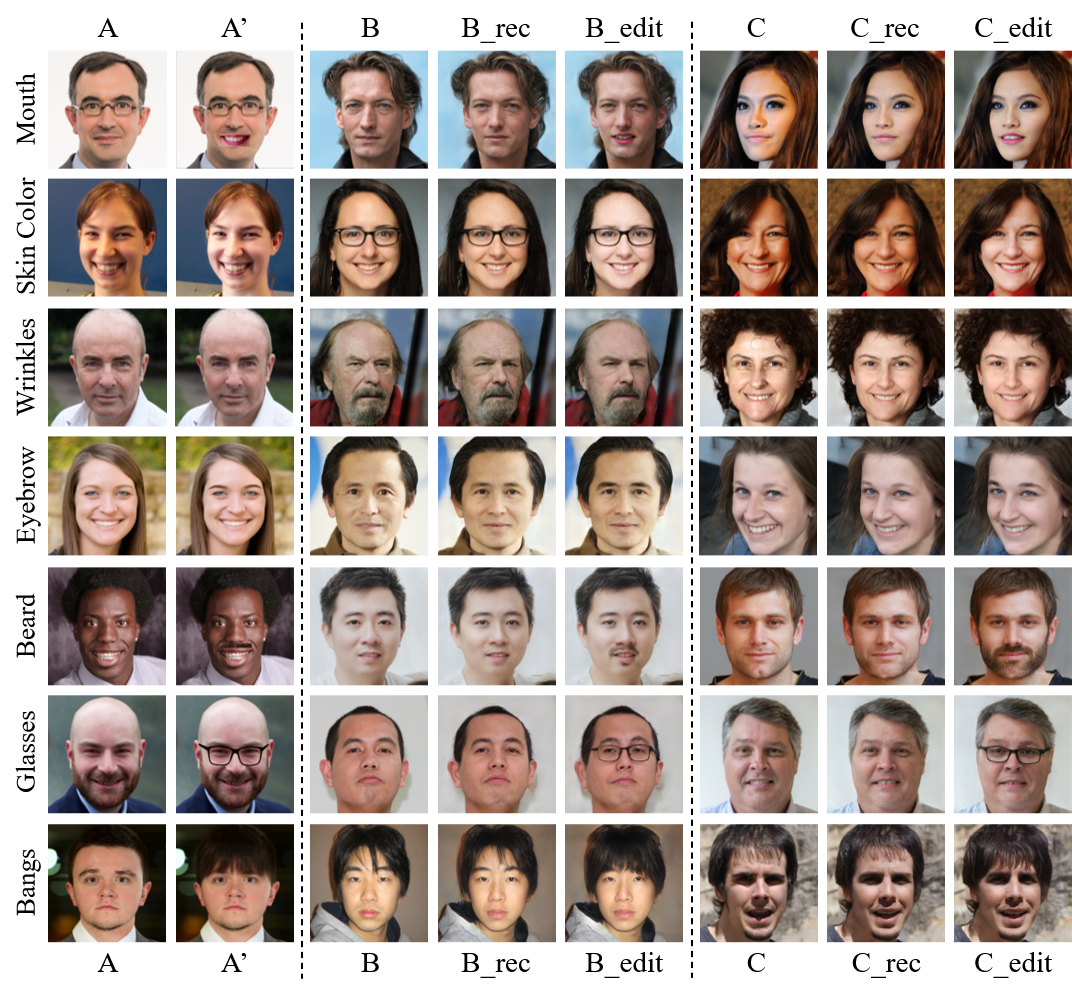

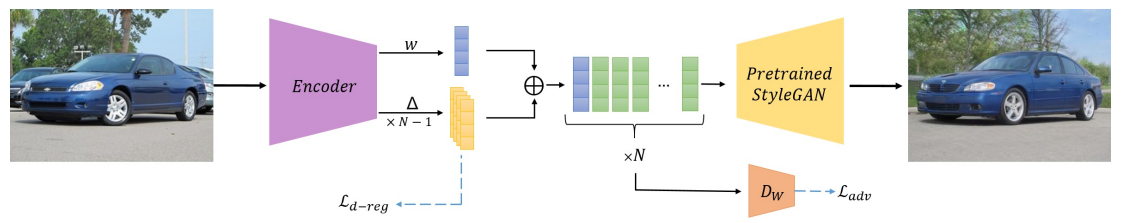

More customized editing results, where each manipulation is defined by a simple image pair. Such a pair can be created efficiently, either with a graphics editor (e.g., Photoshop) or by a simple copy-and-paste.
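One common way to turn an image pair into a reusable edit is latent arithmetic: invert both images, take the difference of their codes as an edit direction, and add that direction to the code of a new image. The sketch below assumes this generic baseline and a hypothetical linear toy generator. It is not necessarily how the customized editing above is implemented.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical toy linear generator: 16-D "image" from a 4-D latent code.
W = rng.standard_normal((16, 4))


def generate(z):
    return W @ z


def invert(x):
    # For a full-rank linear generator, least squares inverts exactly.
    z, *_ = np.linalg.lstsq(W, x, rcond=None)
    return z


def edit_from_pair(x_before, x_after, x_target, strength=1.0):
    """Transfer the edit defined by (x_before -> x_after) onto x_target."""
    direction = invert(x_after) - invert(x_before)
    return generate(invert(x_target) + strength * direction)


# Stand-in for a Photoshop / copy-and-paste edit: shift one latent coordinate.
z_a = rng.standard_normal(4)
delta = np.array([0.0, 1.5, 0.0, 0.0])
x_before, x_after = generate(z_a), generate(z_a + delta)

z_t = rng.standard_normal(4)
x_target = generate(z_t)
x_edited = edit_from_pair(x_before, x_after, x_target)
print(np.allclose(x_edited, generate(z_t + delta)))  # True
```

A real hand-made edit generally lies outside the generator's range, so inversion only approximately captures it. The toy pair here is built inside the range, which is why the transfer is exact.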

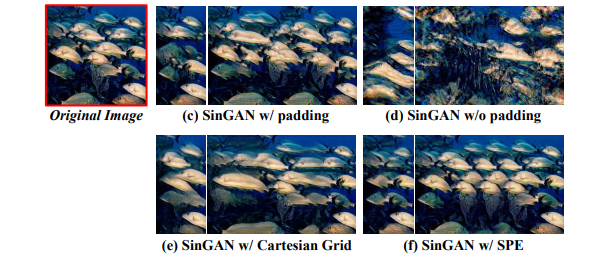

Comment: Proposes that positional encoding is indispensable for generating high-fidelity images, and that zero-padding alone is not sufficient.
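A small 1-D experiment illustrates one reason zero-padding gives only a weak positional signal. On a constant input, a zero-padded convolution produces different values only near the borders. Stacking layers widens that band by the kernel radius per layer, while interior positions stay indistinguishable. This is a toy illustration under assumed settings (a 3-tap all-ones kernel and a length-8 input), not a reproduction of the claim above.

```python
import numpy as np


def conv_zero_pad(x, kernel):
    # np.convolve with mode="same" implicitly pads the input with zeros.
    return np.convolve(x, kernel, mode="same")


x = np.ones(8)       # constant input: carries no positional information itself
kernel = np.ones(3)  # toy 3-tap filter

layer1 = conv_zero_pad(x, kernel)
layer2 = conv_zero_pad(layer1, kernel)
print(layer1)  # [2. 3. 3. 3. 3. 3. 3. 2.]
print(layer2)  # [5. 8. 9. 9. 9. 9. 8. 5.]

# Interior outputs are identical, so these positions cannot be told apart.
interior = layer2[2:6]
print(np.all(interior == interior[0]))  # True
```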